Generative AI is a type of artificial intelligence technology that can produce new content, including images, videos, audio and text. This content is made with software that can create something new based on written prompts or commands.

For example, someone used a generative AI program to create an image of the Pope wearing a white puffer coat. All someone has to do to create an image like this is write “create an image of the Pope wearing a white puffer coat,” and the program creates it.

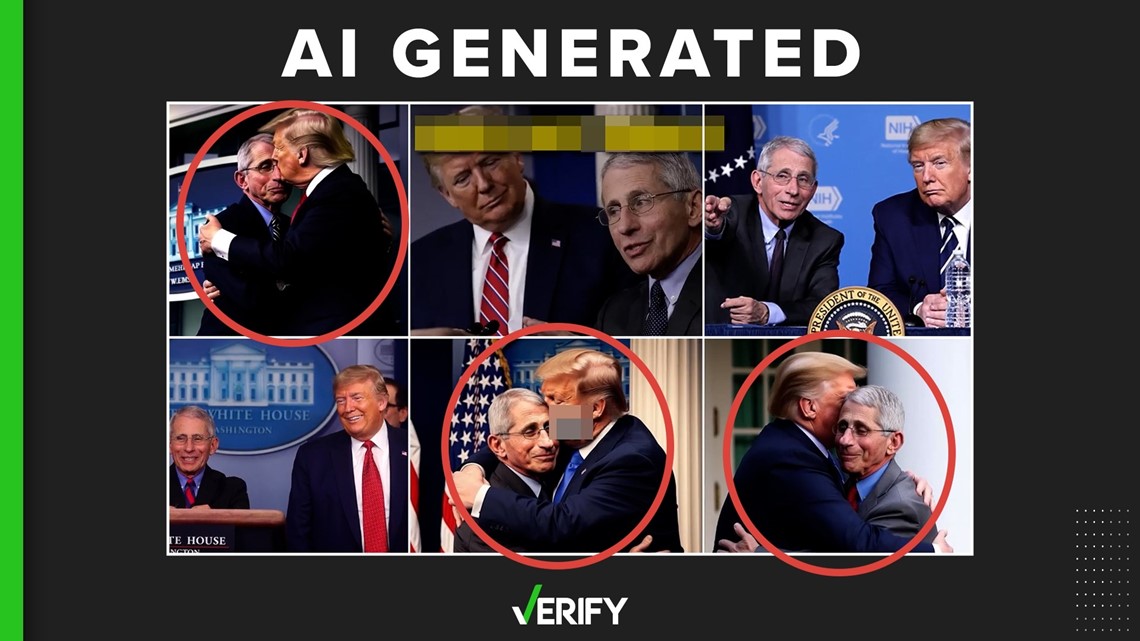

Generative AI is not only being used in humorous cases, like the Pope example. It’s also being used in some political advertising or campaigns. For example, in June 2023, we VERIFIED a campaign ad used generative AI images purporting to show former President Donald Trump and Dr. Anthony Fauci hugging.

Ahead of the New Hampshire primary on Jan. 23, a robocall impersonating President Joe Biden’s voice spread disinformation by telling people not to vote in the election.

As the 2024 election ramps up, there has been growing concern about generative AI’s use in political or election material. Lawmakers and the Federal Election Commission (FEC) have considered rules for the use of AI in political advertising, but there currently are no laws against it.

Tech companies like Meta require disclosures about generative AI in political advertising on their platforms. YouTube and TikTok also require content creators to label any realistic generative AI in political content.

OpenAI, the company behind ChatGPT, recently announced that politicians or anyone affiliated with campaigns are barred from using its generative AI tools to create content for political campaigns.

With generative AI becoming more prevalent ahead of the 2024 presidential election, VERIFY is here to help distinguish what’s real and what’s not.

THE SOURCES

- Siwei Lyu, Ph.D, director of the UB Media Forensic Lab at SUNY Buffalo

- White House Press Secretary Karine Jean-Pierre

- The New Hampshire Attorney General’s Office

- InVid and RevEye, footage forensics tools

- “Beat Biden,” an ad released by the Republican Party

- “Who’s More Woke,” a radio ad released by Courageous Conservatives PAC

- “Trump Attacks Iowa,” an ad released by the Never Back Down PAC

- X post from Argentine President Javier Milei published in October 2023

- @kukartificial, an AI artist on Instagram

- Truth Social post from Trump published on July 10, 2023

WHAT WE FOUND

If you see a political ad and are wondering if it was made using AI, here are some tips to spot red flags:

- Disclaimer: Check to see if there is a disclaimer that the ad contains AI. It could be a small note at the top of a video, an audio narration or some form of source citation.

- Details: AI creation tools can struggle with details. Often, a person seen in an AI-generated image or video has misshapen or distorted features. Numbers, letters, textures and background details are often also distorted or over-stylized in AI content.

- Audio: AI-generated audio often lacks exact speech patterns, tone, inflection and the sound of a person taking a breath. Listen for those signals.

- Reverse search: Candidates often have a large media presence and are photographed frequently. Conduct a reverse image search on any images or frames in a video to see if they’ve been published by a reputable source. If they haven’t, that’s a red flag the content could be AI-generated.

- Check the context: Research the source that released the ad, check for any biases and learn more about the candidates or issues mentioned in the advertisement. Additionally, check for any published reputable sources that corroborate what you are seeing or hearing. If what’s represented in the ad seems out of character or extreme, look for other examples that support or refute the ad.

Now that you have this list of tips for spotting AI, let’s go through some examples of how we used them to VERIFY whether political ads were made with AI.

1. Disclaimer

Some social media platforms now require political advertisements that use generative AI to have labels, or disclaimers, that the video was created using generative artificial intelligence technologies. This could be in the fine print, small text, audio narration, or a credit in the video or image.

This is one of the first things we look for when fact-checking an image or video. Is there a clear disclaimer that the video was created with AI? Was it shared from a social media or online account whose bio states that they create AI content?

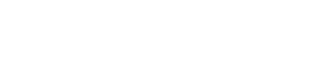

VERIFY found a disclaimer in small text on this political ad released in April 2023 by the Republican Party, titled “Beat Biden.” In the top left-hand corner of the video, a disclaimer says: “Built entirely with AI imagery.” The text is hard to see, but it's there.

The caption of the “Beat Biden” video published to YouTube also says it is “an AI-generated look into the country's possible future if Joe Biden is re-elected in 2024.”

Another example of a disclaimer can be heard in this radio ad released by the Courageous Conservatives PAC, a political action committee (PAC) that supports various conservative candidates at the state and federal levels. The ad targeted Nikki Haley and Tim Scott. Haley is still in the running for president, while Scott ended his campaign in November 2023.

In the radio ad, titled “Who’s More Woke,” Haley and Scott appear to be answering questions in a game-show format. At the beginning of the ad, a fast-talking narration says their voices were recreated using AI.

Not all generative AI content will include a disclaimer, and one is not always legally required.

2. Details

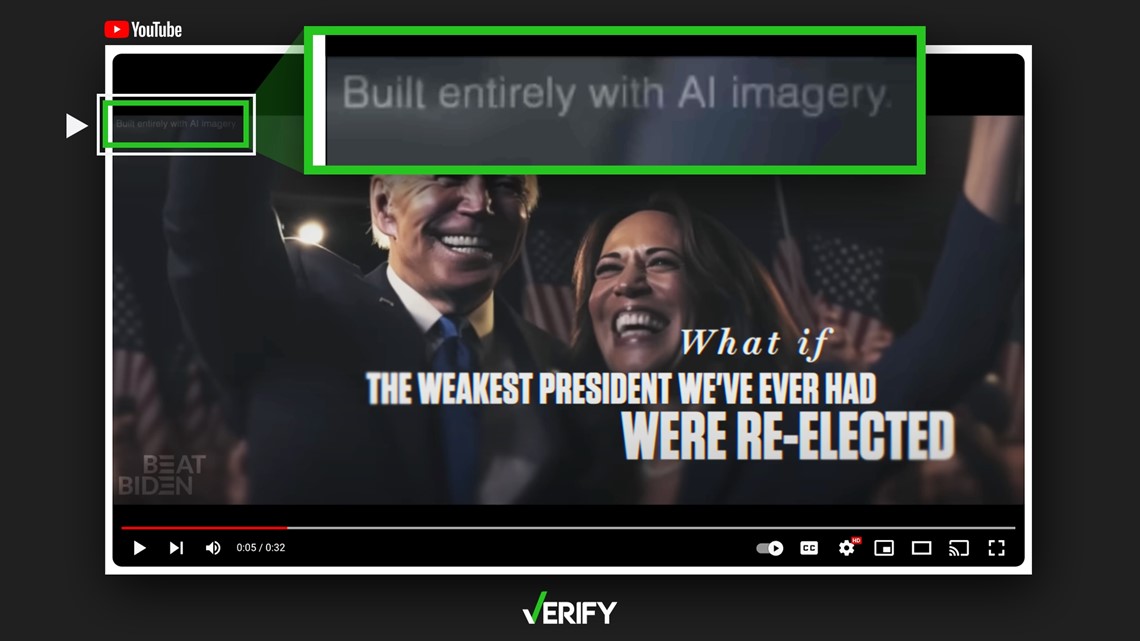

Generative AI technologies can struggle with getting certain details right, like facial features, human body shapes, patterns and textures. For instance, hands or fingers may appear misshapen or distorted and skin might look too smooth.

In the “Beat Biden” ad, Biden is seen standing with his arm around Vice President Kamala Harris. While it does generally look like Biden, his mouth is distorted and out of proportion. Later in the video, a crowd of people can be seen standing next to a building. Some of the people in the crowd are misshapen; for example, one man’s head is stretched. Both of these are signs the video is AI-generated.

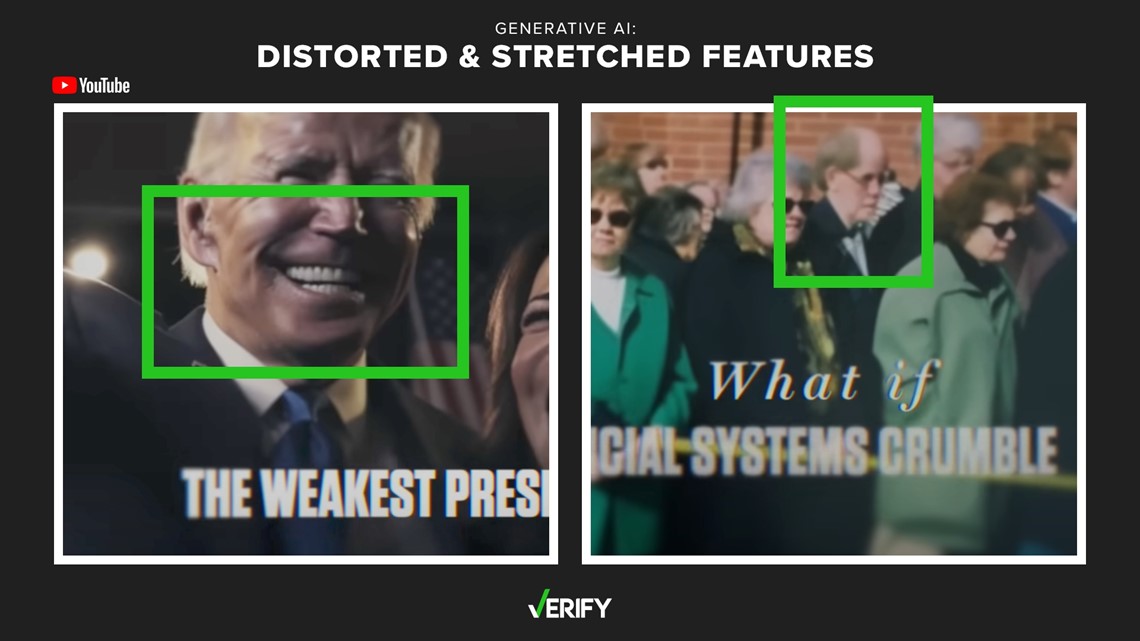

Artificial intelligence was used in campaign material and in forms of propaganda during Argentina’s presidential election held in October 2023.

Javier Milei, the Libertarian party candidate, posted an image that appears to show his opponent Sergio Massa, a member of the Union for the Homeland party, standing in a pose reminiscent of a Chinese communist leader.

VERIFY also found pro-Massa posters that appear to show Massa in prominent positions among his constituents.

While these are artistic interpretations of the candidates in various positions or forms, we could tell each of these was created using artificial intelligence because of the stylized representation, the lack of scale or proportions in the images and the lack of texture.

The pro-Massa posters were shared by an Instagram user who clearly stated in the caption that they were created with AI.

3. Audio

AI-generated audio can be particularly concerning because it's harder to spot AI markers in sound, like in the case of radio ads or phone calls.

There are ways to tell if audio in an advertisement is AI-generated. First, listen for the tone and pace of the voices. Do they sound too robotic? Is there little to no tone or inflection? Is there breathing? When people talk, you can typically hear breaths and other sounds.
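For readers comfortable with a little code, the "listen for pauses" idea can be roughly approximated by measuring how much of a recording is quiet. The Python sketch below is only an illustration and is not a tool VERIFY or any expert cited here used. The function name `pause_ratio`, the 20-millisecond window and the loudness threshold of 500 are all assumptions chosen for the example, and it only reads 16-bit mono WAV files.

```python
import math
import struct
import wave


def pause_ratio(path, frame_ms=20, silence_rms=500.0):
    """Return the fraction of short windows that are quieter than a threshold.

    Assumes a 16-bit mono PCM WAV file. frame_ms and silence_rms are
    illustrative guesses, not calibrated values.
    """
    with wave.open(path, "rb") as wav:
        if wav.getsampwidth() != 2 or wav.getnchannels() != 1:
            raise ValueError("expected 16-bit mono PCM audio")
        rate = wav.getframerate()
        raw = wav.readframes(wav.getnframes())

    # Decode the raw bytes into signed 16-bit samples.
    samples = struct.unpack("<%dh" % (len(raw) // 2), raw)
    frame_len = max(1, rate * frame_ms // 1000)

    quiet = 0
    total = 0
    # Walk through the audio one full window at a time.
    for start in range(0, len(samples) - frame_len + 1, frame_len):
        frame = samples[start:start + frame_len]
        rms = math.sqrt(sum(s * s for s in frame) / frame_len)
        total += 1
        if rms < silence_rms:
            quiet += 1
    return quiet / total if total else 0.0
```

A very low ratio would suggest speech with almost no pauses, but that is only a prompt to listen more closely. Background music, audio compression and a fast talker can all produce the same number, so it should never be treated as proof either way.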

Ahead of the Jan. 23 New Hampshire primary, various news outlets obtained an audio recording of a robocall that sounded like President Joe Biden urging people not to vote in the primary.

“Voting this Tuesday only enables the Republicans in their quest to elect Donald Trump again,” the audio says.

Siwei Lyu, Ph.D, director of the UB Media Forensic Lab at SUNY Buffalo and deepfake expert, told VERIFY in an email that after he analyzed the audio, he found a lack of intonation variations that are present in Biden’s actual voice.

Biden is known for having a stutter, which occasionally causes him to stammer while speaking. In the audio recording, we don’t hear any signs of a stutter. The audio also sounds robotic, and there are no audible intakes of breath between the words or sentences, Lyu said. Those are signs that this robocall was created with AI.

White House Press Secretary Karine Jean-Pierre also confirmed the audio was fake.

In the “Who’s More Woke” radio ad, the audio of Nikki Haley and Tim Scott had the same speed and lack of inflection. You also can’t hear either voice taking breaths between sentences. That is a sign of audio generated by AI. There was also a disclaimer at the beginning of the ad.

Shamaine Daniels, a Democratic candidate running for Congress in Pennsylvania, is using an artificial intelligence “volunteer” named Ashley to make robocalls to constituents. Reuters published a video showing someone receiving a call from Ashley.

Even though a disclaimer is provided, the voice still sounds robotic and not humanlike.

In a political ad titled “Trump Attacks Iowa” from pro-Ron DeSantis PAC Never Back Down, we VERIFIED that the portion of the ad that sounds like Trump’s voice used AI-generated audio.

The ad starts by praising the accomplishments of Iowa Gov. Kim Reynolds.

“Governor Kim Reynolds is a conservative champion. She signed the Heartbeat Bill and stands up for Iowans every day, so why is Donald Trump attacking her?” the ad says.

Audio that sounds like Trump then can be heard reciting: “I opened up the governor position for Kim Reynolds and when she fell behind, I endorsed her, did big rallies and she won. Now, she wants to remain ‘neutral.’ I don’t invite her to events!”

The audio that is supposedly Trump sounds robotic, and there are no pauses between the words or sentences. At the end of the quote, the enunciation on the word “her” is inconsistent with the rest of the audio in the recording. Those are key signs the audio was AI-generated.

4. Reverse search

When VERIFY fact-checks images or video, we conduct a reverse image search to determine whether the content has appeared on the internet before, whether it has been edited from a previous version or whether it is being shared out of context. For example, an old tornado photo may be shared out of context in relation to a current weather event. But if a photo or video is created with generative AI, there often won’t be a match when we reverse search. That is a red flag that the content could have been created with AI.

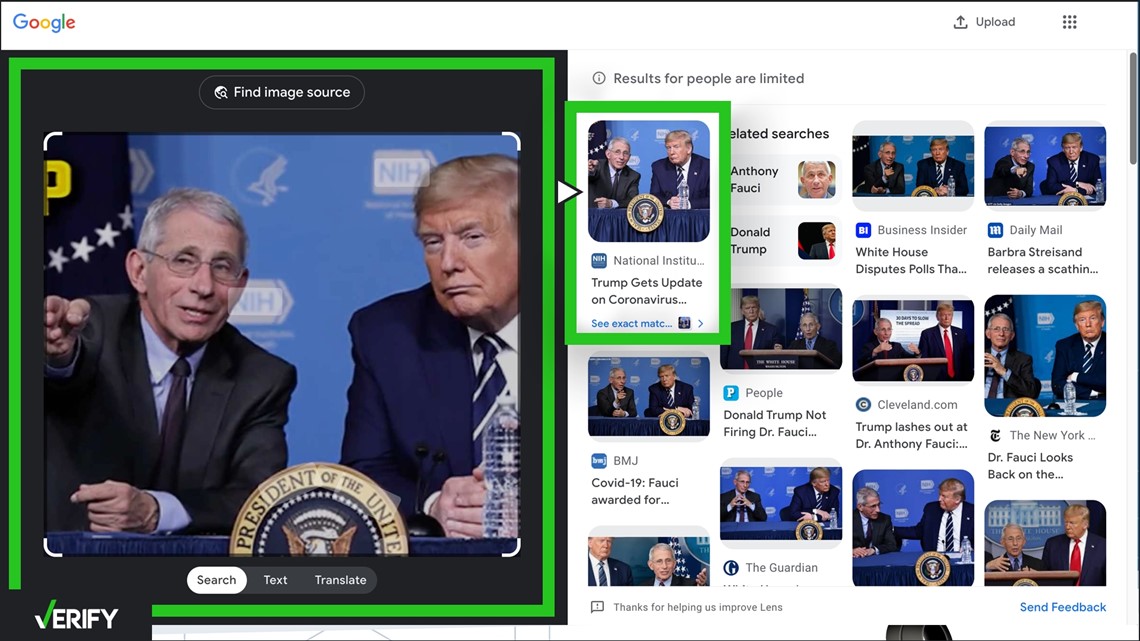

To conduct a reverse image search through Google from your computer, follow these steps:

- Right-click on the photo in your Chrome browser and select “Search image with Google.” The results page will show you other places the image may have appeared.

- You can also go to images.google.com, click on the camera icon, and upload an image that you’ve saved to your computer. This should also show a results page.

Google also has guides to reverse image searching from your iPhone or Android device.

RevEye is a browser extension you can install that also allows you to conduct a reverse image search by right-clicking any image online.

A website called TinEye also allows you to upload an image from your computer to find where it has appeared online.

When we fact-checked the political ad that appeared to show images of Trump hugging Fauci, we conducted a reverse image search.

VERIFY isolated each of the six pictures included in the collage portion of the video, and used reverse image search to identify whether they had been published before. You can isolate pictures in a video using a screenshot tool, or an online video analyzer such as InVid.

Three of the images – where Trump is standing or sitting near Fauci – had been published before by reputable news organizations or by the photographers who took the images at public events in March of 2020.

When we reverse image searched the other three images, which show Trump hugging Fauci, we found no instances of the two embracing before the ad aired. Given the constant presence of media at public events for the president – and especially for appearances alongside Fauci during the COVID-19 pandemic – any such hug would have been photographed and widely published.

This is an indicator those three were made with AI.

5. Check the context

As with anything you might see on social media or television, or hear on the radio, you should always check the context. This includes looking into the organization that made the ad and learning more about the candidates or issues that might be the subject of the ad. This is helpful guidance for anything you see – not just AI-generated content.

When we say check the context, that includes but isn’t limited to:

- Do a gut check. Does what you're seeing or hearing make sense? If it feels off, do an internet search of the topic and the person.

- Look for reputable articles, resources or primary sources that back up any claim.

- Check for any official statements from the subject of the content in question.

- Look for pieces of information or details that are inconsistent with others that you have read or seen.

- Look for fact-checks of the content from fact-checking sites such as VERIFY.

In the “Trump Attacks Iowa” example, VERIFY checked the context. The ad said the statement was made on July 10, 2023, and we searched the internet for interviews and keywords that would show Trump actually said the quote heard in the ad. We found no evidence Trump recorded that quote.

We did, however, find the exact quote, word-for-word, in a Truth Social post from Trump published on July 10, 2023. Based on the red flags we identified when we were listening for audio details before, we concluded that the ad creators took Trump’s post and used generative AI to turn that into audio.

Trump’s campaign supervisor also gave statements to Politico and CBS News that confirmed the audio was fabricated.

When we first heard the imitation Biden robocall, we checked with reputable sources to confirm it wasn't Biden's voice on the call.

The New Hampshire Attorney General’s Office confirmed that the “robocall sounds like the voice of President Biden” but “this message appears to be artificially generated based on initial indications.” The attorney general’s office has launched an investigation.

During a Jan. 22 press briefing, the White House also confirmed Biden did not make those calls and it was not his voice.

Generative artificial intelligence technologies are improving rapidly, making detection more difficult. While there are AI detection tools available online, they aren’t 100% reliable. Some of the tools that can be helpful include Deepware AI’s scanner or AI Voice Detector, but they should only be used to help spot red flags and not as the only way to verify whether something is real.
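Because no single signal is conclusive, it can help to record what you find for each of the five tips and look at them together. The short Python sketch below is one hypothetical way to keep that tally. The class name, the field names and the "two or more" cutoff are assumptions made up for this example, not a scoring system VERIFY uses.

```python
from dataclasses import dataclass


@dataclass
class RedFlags:
    """One yes/no answer per tip in this guide (field names are hypothetical)."""
    has_ai_disclaimer: bool = False
    distorted_details: bool = False
    robotic_audio: bool = False
    no_reverse_search_match: bool = False
    lacks_corroboration: bool = False


def summarize(flags):
    """Return the list of raised flags and a cautious, plain-language note."""
    raised = [name for name, value in vars(flags).items() if value]
    if flags.has_ai_disclaimer:
        note = "labeled as AI by its creator"
    elif len(raised) >= 2:  # arbitrary cutoff for illustration only
        note = "multiple red flags; seek confirmation from reputable sources"
    elif raised:
        note = "one red flag; keep checking"
    else:
        note = "no red flags found; this does not prove authenticity"
    return raised, note


# Example: audio sounds robotic and no reputable source backs up the quote.
raised, note = summarize(RedFlags(robotic_audio=True, lacks_corroboration=True))
print(raised, "->", note)
```

The note is deliberately worded as a next step rather than a verdict, since even several red flags only mean the content deserves a closer look.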

If you have questions about AI, or if you think something was created with AI and want VERIFY to check it out, email us at questions@verifythis.com or tag us on social media @verifythis.